Automotive Safety Rules Embedded Vision Summit

Although this week’s Embedded Vision Summit explored new applications for vision, automotive safety still drives the agenda.

The consensus from the Embedded Vision Summit (May 2–4) in Santa Clara this week: We have the programming tools, trainable algorithms, growing data sets and powerful processors to build vision systems with deep learning intelligence. Point an intelligent camera at an unfamiliar object (or a picture of an unfamiliar object) and your vision system will tell you exactly what you’re looking at. This is particularly useful in automotive safety applications (like ADAS) where your front-view cameras will identify—with amazing fidelity—cyclists and pedestrians on the road with you.

While many presenters at the three-day conference could offer quotable opinions on ADAS, very few would tell you what might displace automotive safety as the vision industry’s “next big thing.” Automotive safety rules.

By itself, the Embedded Vision Summit serves as a signpost for the image processing industry and for related applications that depend on vision and artificial intelligence. The summit drew over 1,000 engineers, product and software developers (as well as venture capitalists), and its speakers cataloged state-of-the-art hardware and software tools that would “make sense” out of captured visual information. Some analysts and VCs even offered clues for monetizing the collected data.

Automotive safety has emerged as the most visible (and potentially lucrative) application for embedded vision. With approximately 100 million new cars coming onto the road each year, automotive safety should not be taken lightly, reminds Jeff Bier, president of consultancy Berkeley Design Technology, Inc. (BDTI) and organizer of the Embedded Vision Summit. Coupled with automatic braking, active suspensions and road surface mapping, advanced driver assistance systems (ADAS) have the potential to save lives and prevent accidents. Future applications of embedded vision, like “watching your ‘stuff’,” will likely be waiting in the wings, Bier said.

In addition to pedestrian recognition, vision will enable autonomous driving. ADAS demand will grow at a 19.2% CAGR from 2015 to 2020, reaching $19.9 billion that year, claimed Qualcomm’s senior VP Raj Talluri, citing projections by Strategy Analytics. The vision software and hardware that supports autonomous vehicles will also support mobile robots and drones (29 million units in 2021). What other applications will embedded vision enable? conference attendees wanted to know.

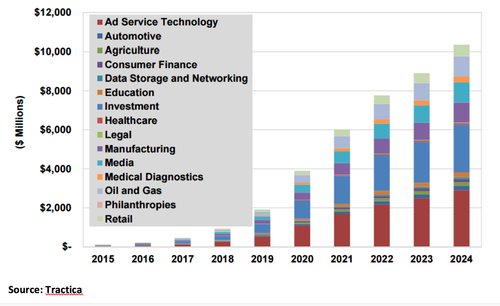

Bruce Daley, principal analyst at Tractica, cited factory automation and agriculture applications in his presentation. These applications, like ADAS, will likely depend on neural network intelligence. By itself (without hardware), neural network software accounted for $109 million in 2015, and will grow to $10.4 billion by 2024, Daley says.
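To put Daley's two revenue figures in perspective, the growth rate they imply can be worked out directly. The sketch below is only arithmetic on the numbers quoted above; it assumes nine compounding years (2015 to 2024) and is not a figure that Tractica itself published here.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures quoted in the text, in millions of dollars.
revenue_2015 = 109.0
revenue_2024 = 10_400.0
years = 2024 - 2015  # assumption: nine compounding periods

implied_growth = cagr(revenue_2015, revenue_2024, years)
print(f"Implied CAGR: {implied_growth:.1%}")
```

The result is roughly 66% per year, far steeper than the 19.2% CAGR quoted for ADAS, although the two forecasts start from very different bases.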

The market driver, he says, is a virtual flood of data. Like the Internet of Things (IoT), embedded vision will generate truckloads. Apart from the issues of privacy and security, users will want to know, what do you do with The Data? Do you sell it? Do you analyze it… and sell the analysis? Do you sell analysis tools? Trainable deep learning algorithms are required to analyze the data, Daley reminds. The likely neural network analysis steps include image capture and digitization, DSP (including convolutional) analysis of the data, and the construction of sellable maps which serve as the foundation for a viable business. In one particular use case—agriculture—satellite images are rendered into maps which tell farmers about their land, where they would find moisture and plant-friendly soil.

Other deep learning markets identified by Tractica include static image processing, in which the content of photos is recognized, tagged, stored and retrieved on request. Companies like Google and Facebook, Daley estimates, use deep learning to identify objects within some 350 new photos a day. An index of these photos would also support personalized ad servers (see Figure 1).

Figure 1: Deep learning software revenue by industry, worldwide 2015-2024 (Source: Tractica)

Software and hardware required to facilitate deep learning

Deep learning techniques have dramatically improved the performance of computer vision tasks such as object recognition, confirms Qualcomm’s Raj Talluri. Dramatic intelligence in mobile and embedded devices enables what he calls “the ubiquitous use of vision.”

Cell phone vision applications require data extraction and processing closest to the source. While it complements cloud computing, rather than displacing it, local processing of images requires strong computational ability: 1,000 MIPS for object edge and motion detection, and up to one million MIPS for full computer vision (i.e., “tell me what I’m looking at”).

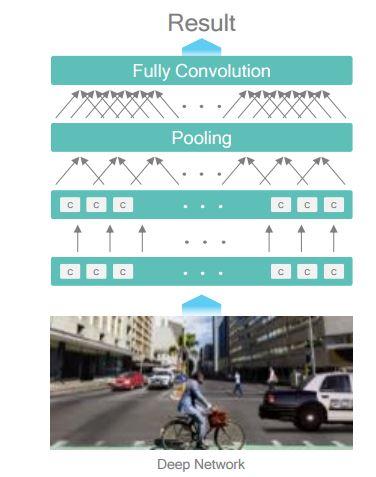

For a pedestrian crossing in traffic, says Talluri, a car approaching an intersection at 60 km/hour would have 0.6 seconds to resolve the content of a VGA image. An 8K image, in contrast, could be resolved in 8.4 seconds (with a specialized processor), giving the driver more time to slam on his brakes, but this requires 108 times more compute power. Recent mobile device improvements (enhancements in cell phone computing and memory architectures, camera sensors and optics) improve image detection and response times (see Figure 2).

Figure 2: Recognizing cyclists and pedestrians in traffic takes several successive convolutions. (Source: Qualcomm)
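The “108 times” figure quoted by Talluri matches the raw pixel ratio between an 8K frame and a VGA frame. The sketch below checks that arithmetic and converts the two reaction times into distance travelled at 60 km/hour. It assumes “8K” means 7680 x 4320 (8K UHD) and that compute cost scales linearly with pixel count; neither assumption was spelled out in the talk.

```python
# Frame sizes in pixels (width, height). The 8K size is an assumption:
# 7680 x 4320 (8K UHD). The talk did not specify the exact format.
VGA = (640, 480)
UHD_8K = (7680, 4320)

def pixel_count(size):
    """Total number of pixels in a frame of the given (width, height)."""
    width, height = size
    return width * height

def distance_covered_m(speed_kmh, seconds):
    """Distance travelled at constant speed, in meters."""
    return speed_kmh * 1000.0 / 3600.0 * seconds

pixel_ratio = pixel_count(UHD_8K) / pixel_count(VGA)

print(f"8K has {pixel_ratio:.0f}x the pixels of VGA")
print(f"0.6 s at 60 km/h: {distance_covered_m(60, 0.6):.0f} m")
print(f"8.4 s at 60 km/h: {distance_covered_m(60, 8.4):.0f} m")
```

At 60 km/hour the car covers about 10 meters in 0.6 seconds and about 140 meters in 8.4 seconds, which is the practical difference between the two cases.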

Google’s Brain team

The role of evolving neural network models, namely convolutional neural networks (CNNs) and recurrent neural networks (RNNs), was perhaps best articulated by keynoter Jeff Dean, who heads the Brain team at Google. The most important property of neural networks, Dean explains, is that their results get better with more data, bigger models, and more computation. (Of course, better data shaping algorithms, new insights and improved DSP computational techniques improve the learning process and speed results.)

The models Google evolved were developed by looking at pictures with varying content and degrees of complexity and asking what characterizes our degree of “understanding.” Simply stated, how would you describe what you see? For example:

- “A young girl asleep on the sofa cuddling a stuffed bear,” described by a human observer.

- “A baby is asleep next to a teddy bear,” described by one of the compute models.

- “A close up of a child holding a stuffed animal,” described by another compute model.

What Google derived, says Dean, was a class of machine learning tools that embodied a collection of simple, trainable mathematical functions — capable of running on (say) 100 different platforms (CPUs, GPUs, DSPs and FPGAs) at the same time. What Google calls its TensorFlow engine enables “large-scale machine learning on heterogeneous distributed systems.”

There are two stages in the classification of an image: The first stage includes training on raw data and its classification (on large, distributed platforms, using data sets like ImageNet). The second stage consists of deployment of recognition/identification modules (which run on mobile platforms). The computational model is described as a data flow graph, constructed in C++ for efficiency and running on Android and iOS, which enables complex searches on cell phones.
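The data flow graph idea can be illustrated without any framework. The sketch below is a toy written for this article, not the TensorFlow API: the `Node` class and the helper functions `placeholder`, `constant`, `multiply`, `add` and `relu` are all hypothetical names. It shows the separation that matters: the graph is built once, then evaluated many times with different inputs.

```python
# Minimal data flow graph: nodes are operations, edges carry values.
# Illustrative only; this is not the TensorFlow API.

class Node:
    def __init__(self, op, inputs=()):
        self.op = op          # callable that computes this node's value
        self.inputs = inputs  # upstream nodes whose outputs feed this one

    def evaluate(self, feed):
        # A fed node (a placeholder) takes its value from the feed dict.
        if self in feed:
            return feed[self]
        args = [node.evaluate(feed) for node in self.inputs]
        return self.op(*args)

def placeholder():
    def missing():
        raise ValueError("placeholder was not fed a value")
    return Node(missing)

def constant(value):
    return Node(lambda: value)

def multiply(a, b):
    return Node(lambda x, y: x * y, (a, b))

def add(a, b):
    return Node(lambda x, y: x + y, (a, b))

def relu(a):
    return Node(lambda x: max(0.0, x), (a,))

# Build the graph once: y = relu(w * x + b), a single "neuron".
x = placeholder()
w = constant(2.0)
b = constant(-1.0)
y = relu(add(multiply(w, x), b))

# Run it repeatedly with different inputs.
print(y.evaluate({x: 3.0}))  # 2*3 - 1 = 5.0
print(y.evaluate({x: 0.0}))  # 2*0 - 1 = -1, clipped to 0.0
```

Because the graph is just a description of the computation, a real engine is free to place different nodes on different processors, which is what makes a heterogeneous, distributed deployment possible.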

Performing convolutions within convolutions (searching photos without tags), the “GoogLeNet” engine reduced raw image identification errors to less than 6.6% (better than AlexNet, an older model, with 16.4% erroneous returns).

In addition to visual imagery, Google’s inception engine can process text (with trillions of words of English and other languages), audio (with tens of thousands of hours of speech per day) and user activity (queries, marking messages spam, etc.).

Access to the inception engine is available through Google APIs including Cloud Speech API (speech to text), Cloud Vision API, Cloud Text API for sentiment analysis and the Cloud Translate API.

Cyclists and pedestrians everywhere

There was no lack of hardware capable of running complex convolutions on display at the embedded vision conference. FPGA makers Intel/Altera and Xilinx demonstrated ADAS object detection systems, with pedestrians and cyclists clearly highlighted. Intel’s CNN-based pedestrian detector was running on an Altera Arria 10 accelerator board, with software from i-Abra. The FPGA offers a smaller computational footprint than GPUs, Intel claims, and requires much lower power to process each pixel. (The Xilinx cyclist detector ran on a Zynq UltraScale+ board, using 64-bit ARM cores.)

Qualcomm’s soon-to-be-introduced Snapdragon 820 and software development kit (SDK) is touted as a Neural Network Processing Engine, providing accelerated runtime for the execution of deep and recurrent neural networks.

Cadence Tensilica’s Vision P6 processor targets embedded neural network applications. Cadence’s Chris Rowen actually compared his processors’ performance with AlexNet, thought to consume 800 million MACs (60 million model parameters) for each image it handles. Training a vision system to recognize objects requires between 10^16 and 10^22 MACs per data set. Cadence will sell the silicon IP required to process neural network convolutions. Custom DSPs using this IP can resolve tradeoffs in energy consumption and processing bandwidth.
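To see where multiply-accumulate (MAC) counts of this size come from, it helps to count the MACs in a single convolution layer: every output value needs one MAC per kernel weight per input channel. The sketch below applies that formula to the layer shape commonly cited for AlexNet’s first convolution (55 x 55 outputs, 96 filters, 11 x 11 kernels, 3 input channels). Those dimensions are an assumption taken from general descriptions of the network, not from the Cadence talk.

```python
def conv_macs(out_h, out_w, out_channels, kernel_h, kernel_w, in_channels):
    """MACs for one convolution layer (bias and activation ignored)."""
    outputs = out_h * out_w * out_channels
    macs_per_output = kernel_h * kernel_w * in_channels
    return outputs * macs_per_output

# Assumed shape of AlexNet's first convolution layer (not from the source).
conv1_macs = conv_macs(out_h=55, out_w=55, out_channels=96,
                       kernel_h=11, kernel_w=11, in_channels=3)

print(f"{conv1_macs:,} MACs")  # 105,415,200 MACs
```

One layer alone accounts for about 105 million MACs, so a per-image total in the high hundreds of millions across the whole network is plausible.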

In his talk on automotive image processing, NXP’s Tom Wilson said ADAS currently accounts for $11 billion in revenues, which will grow to $130 billion. He cited ABI Research (with a different, larger number than that cited by Qualcomm earlier).

There are different levels of driver assistance, he reminded, each requiring tradeoffs in processor requirements, cost points and energy consumption. There are different requirements for partially autonomous vehicles, semi-autonomous, and fully autonomous vehicles. In each case, you want to know what surrounds the car. Automotive Lidar offers very high resolution, Wilson said, but only extends 100 meters from the car. Automotive radar extends 250 meters, but offers little resolution for object detection. Cameras give you high resolution to 150 meters.

There are issues associated with each of these. Velocity detection, for example, is a capability built into radar systems. Doppler waveforms are valuable in determining whether objects are approaching or retreating, and how fast. But radar doesn’t give you very good edge detection. Lidar can give you a 360-degree scan, but needs special processing to tell you what you’re looking at. The denser image data requires more local compute power. Hence, there are tradeoffs between cost and detection capability.
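The radar velocity measurement mentioned above rests on the Doppler relation: a target closing at radial speed v shifts the returned frequency by 2·v·f/c, where f is the carrier frequency and c is the speed of light. The sketch below is a minimal illustration. The 77 GHz carrier is an assumption (a band widely used for automotive radar), not a figure from Wilson’s talk, and the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second
CARRIER_HZ = 77e9               # assumed automotive radar carrier frequency

def doppler_shift_hz(radial_speed_ms, carrier_hz):
    """Two-way Doppler shift; positive means the target is approaching."""
    return 2.0 * radial_speed_ms * carrier_hz / SPEED_OF_LIGHT

def speed_from_doppler(doppler_hz, carrier_hz):
    """Invert the relation: recover radial speed from a measured shift."""
    return doppler_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)

closing_speed = 60 * 1000.0 / 3600.0  # 60 km/h expressed in m/s
shift = doppler_shift_hz(closing_speed, CARRIER_HZ)

print(f"Doppler shift: {shift / 1000.0:.1f} kHz")
print(f"Recovered speed: {speed_from_doppler(shift, CARRIER_HZ) * 3.6:.1f} km/h")
```

The sign of the shift says whether the object is approaching or retreating, and its magnitude gives the speed, but nothing in this measurement describes the object’s outline, which is why radar is paired with cameras or lidar.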

Marco Jacobs of Videantis praises camera scans for their ability to extend the driver’s visibility. The image processing pipeline will include 360-degree imagery and 3D video, though the actual vision path (what the car needs to see) is different from the display path (what the driver needs to see). The car determines a pedestrian has stepped off the curb before the driver is alerted.

This complicates the image processing. The International Organization of Motor Vehicle Manufacturers, known as the “Organisation Internationale des Constructeurs d’Automobiles” (OICA), identifies five levels of automation. At Level 0, the driver controls the car. At Level 1, the car steers and controls speed. At Level 2, the car drives, but the driver must monitor and assert control when necessary. At Level 3, the car drives… but asks the driver to step in under certain situations. At Level 4, the car exerts total control on defined use cases. At Level 5, says Jacobs, we experience a massive paradigm shift.

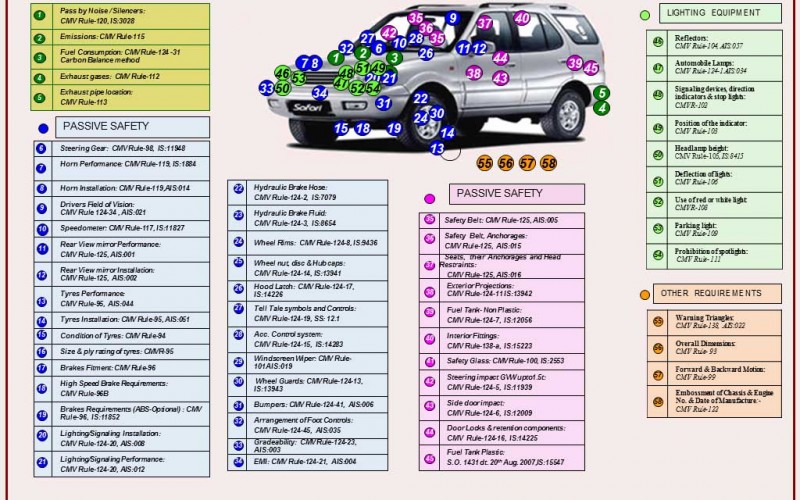

The U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) mandates rear-facing cameras on all light vehicles (cars below 10,000 pounds) by May 2018. The New Car Assessment Program (NCAP) requires front-facing cameras on all cars by 2020.

As much as 25% of car costs will be electronics, Jacobs concludes. This will grow: As many as 250 electronic control units (ECUs) will populate the typical high-end car in the near future. Distributed processing allows intelligence options to be added or subtracted “plug and play,” but this will be handled only by more complex coupling of the image processing system (an issue camera sensor maker Basler is attempting to address with the replacement of USB by LVDS cabling).

—Stephan Ohr is a former director for Semiconductor Research at Gartner, where he tracked analog and power management ICs with forecasts, market share analyses, and client advisories. Prior to his 10 years at Gartner, Ohr was editor in chief of Planet Analog and an editor on EE Times.